Going beyond the buzzword: What is Prompt Engineering?

Aug 23, 2023

When I first heard the term "prompt engineering", like many others, I believed this couldn't possibly be a real thing. How could it be? In a few seconds you can write a question to ChatGPT and immediately get an insightful answer.

I started integrating large language models (LLMs) into a side project, NetworkGPT, and discovered how nuanced it actually is to create a prompt that can be used reliably in a production application. Creating such a prompt requires precision, creativity, and an understanding of each model's capabilities (and latency) in order to get meaningful results.

Prompt engineering is a new field, and there isn’t a standardized definition yet. From my experience, here's how I would describe the role of a prompt engineer.

How do we define a prompt engineer?

A prompt engineer carefully designs, crafts, and tests prompts that can be turned into a reliable library or API and integrated into an application. The “engineering” involves not only writing the prompt but also taking a higher-level perspective: managing the structure of all the prompts in the system, as well as the latency and accuracy of the answers.

Creating great prompts is a more artistic form of software engineering, as there isn’t a clear right or wrong way to design a prompt. It does, however, require the skill of framing and decomposing problems, a common theme in many fields of engineering.
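One way to read "turned into a reliable library or API" is that each prompt lives in code as a named, versioned template instead of an ad-hoc string scattered through the application. The sketch below uses Python's standard `string.Template`; the `PromptTemplate` class, its fields, and the `GROUP_FRIENDLY_V1` prompt are hypothetical names chosen for illustration, not part of any particular library.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptTemplate:
    """A named, versioned prompt (hypothetical structure for illustration)."""
    name: str
    version: str
    text: str

    def render(self, **fields: str) -> str:
        # substitute() raises KeyError if any placeholder is left unfilled,
        # so a half-built prompt never reaches the model.
        return Template(self.text).substitute(**fields)


# Hypothetical example prompt; versioning lets you compare revisions later.
GROUP_FRIENDLY_V1 = PromptTemplate(
    name="group_friendly",
    version="1",
    text="Evaluate the group friendliness of $restaurant_name.",
)

if __name__ == "__main__":
    print(GROUP_FRIENDLY_V1.render(restaurant_name="Example Bistro"))
```

Keeping the name and version next to the text makes it easier to track which prompt produced which answers when you later tune accuracy or latency.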

An Example of the Nuances in Prompt Engineering

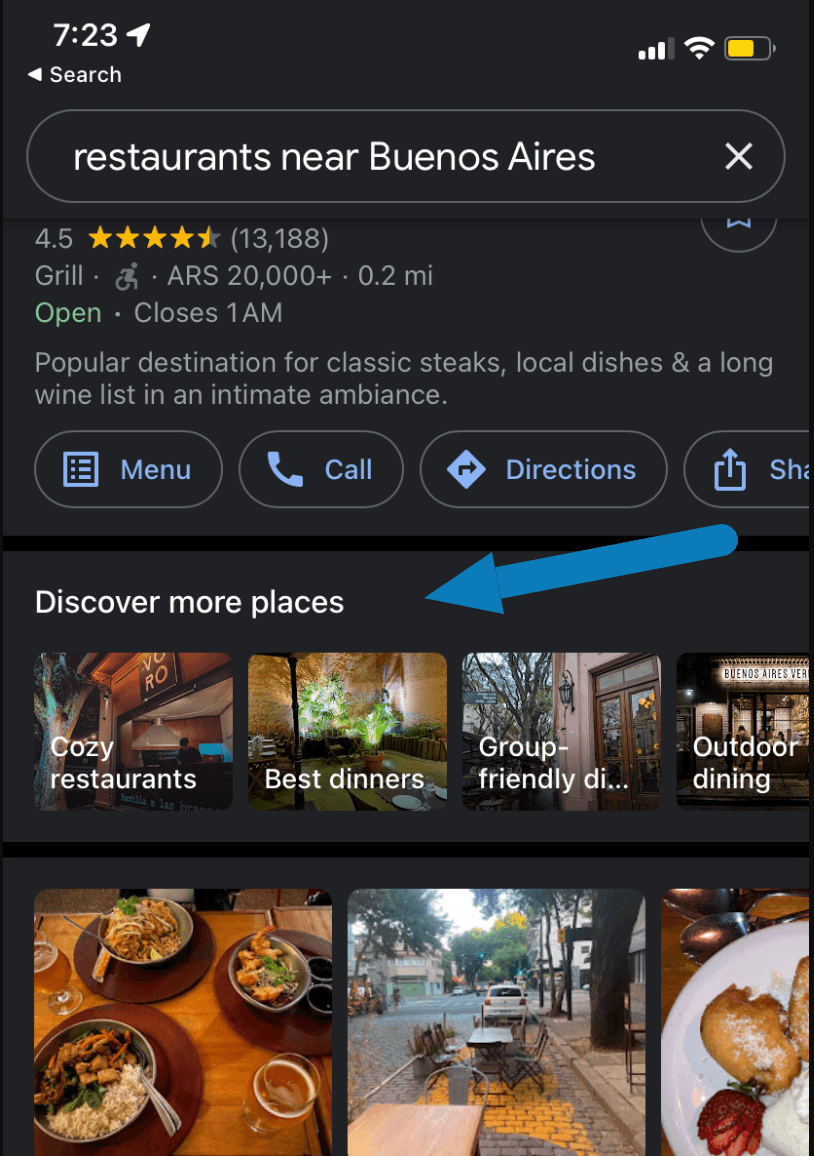

Let’s go through a tangible example to understand prompt engineering better. Say we want to create an app that helps users discover restaurants in a particular city by certain attributes. This is one of my favorite features in Google Maps when I’m in a new city.

In the image above you’ll see Google has a section called “Discover more places” to find interesting restaurants in your local area. You’ll notice most of these categories are subjective: “Cozy restaurants”, “Best dinners”, “Group friendly”.

Now say your friendly, everyday CEO comes to you one day and asks you to add a “Group friendly” restaurants category to your app.

In engineering speak, he is basically asking:

> Can you determine if this particular restaurant is group friendly?

Once we can determine whether one restaurant is group friendly, we can build a database of all the group friendly restaurants in a particular city.
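Once the per-restaurant question can be answered, building the city-level list is a plain filter. In this sketch, `Restaurant`, `build_group_friendly_index`, and the `is_group_friendly` callback are hypothetical names; in a real system the callback would send a prompt like the ones developed in the following steps to an LLM.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Restaurant:
    name: str
    city: str


def build_group_friendly_index(
    restaurants: Iterable[Restaurant],
    city: str,
    is_group_friendly: Callable[[Restaurant], bool],
) -> list[str]:
    """Return the names of restaurants in `city` that pass the check."""
    return [
        r.name
        for r in restaurants
        if r.city == city and is_group_friendly(r)
    ]


if __name__ == "__main__":
    sample = [
        Restaurant("Long Table", "Austin"),
        Restaurant("Tiny Counter", "Austin"),
        Restaurant("Big Hall", "Denver"),
    ]

    def fake_check(r: Restaurant) -> bool:
        # Placeholder for the LLM-backed check.
        return r.name != "Tiny Counter"

    print(build_group_friendly_index(sample, "Austin", fake_check))  # ['Long Table']
```

The hard part is not this loop but making `is_group_friendly` trustworthy, which is what the rest of this example is about.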

Unfortunately, as currently phrased, the question is too subjective: the answer depends on someone's personal feelings or opinions rather than on specific facts or evidence. When that is the case, an LLM is unlikely to give you good results. A litmus test I use: if you gave this question to a group of 10 people, would they generally give you a similar answer? If not, we likely need to rephrase the question (aka the prompt).
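The 10-person litmus test can be made concrete by recording each person's answer and measuring what share agrees with the most common one. The function names and the 0.8 threshold below are hypothetical choices for illustration, not a standard cut-off.

```python
from collections import Counter


def agreement_rate(answers: list[str]) -> float:
    """Share of answers that match the most common answer."""
    if not answers:
        raise ValueError("need at least one answer")
    normalized = [a.strip().lower() for a in answers]
    _, top_count = Counter(normalized).most_common(1)[0]
    return top_count / len(normalized)


def is_too_subjective(answers: list[str], threshold: float = 0.8) -> bool:
    # Hypothetical threshold: below 80% agreement, rephrase the question.
    return agreement_rate(answers) < threshold


if __name__ == "__main__":
    # Ten made-up answers to "Is this restaurant group friendly?"
    answers = ["yes"] * 6 + ["no"] * 4
    print(agreement_rate(answers))     # 0.6
    print(is_too_subjective(answers))  # True
```

A 6-to-4 split means the question leaves too much room for personal opinion, which is exactly the signal to rephrase it.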

Let’s dive into how we can be more precise, rephrase and decompose this into a prompt that an LLM may be able to evaluate.

Step 1: Rephrase the question to be more precise

> Evaluate the “group friendliness” of this restaurant. We define group friendly as whether a restaurant can accommodate medium sized groups of people.

You’ll notice the above prompt is still too subjective. Different people might have different opinions of what a “medium sized” group is.

Step 2: Rephrase the question (again) to be more precise

For simplicity let’s assume we are using an LLM that can interpret both images and text.

> You are assigned the task of evaluating the group friendliness of this restaurant. A group friendly restaurant is defined as a restaurant that has a significant number of tables that can support 4 people with a few tables being able to support at least 6 to 8 people.

Step 3: Decompose the question into smaller pieces and make each piece precise enough for the LLM to evaluate

What should the LLM use to create its analysis? What information do we have? Perhaps pictures of the restaurant and its reviews.

> You are assigned the task of evaluating the group friendliness of this restaurant based on the pictures and reviews given for the restaurant.
>
> For each of the following questions, give one point if the answer is yes.
>
> 1. Does the restaurant have more than 10 tables?
> 2. Does it seem that 25% or more of the tables can seat 4 or more people?
> 3. Is there at least one table that seats 8 people, or is it clear that two 4-person tables can easily be combined?
>
> Then use the reviews to check whether anyone has mentioned the following. Give one point for each.
>
> 4. Has someone mentioned taking their group of friends to the restaurant and having a good time?
> 5. Has someone mentioned having a small event there, such as a birthday party?

Now we have given the LLM specific questions and created clear evaluation criteria, so it can give us a score between 0 and 5.
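To turn the rubric into a number your application can store, one option is to ask the model to answer each question on its own line and then count the "yes" answers. This is a minimal sketch under an assumed reply format (`<number>: yes` or `<number>: no`); `build_prompt`, `score_response`, and that format are hypothetical, and no specific LLM API is called here.

```python
import re

# Rubric questions from the Step 3 prompt (lightly reworded).
QUESTIONS = [
    "Does the restaurant have more than 10 tables?",
    "Does it seem that 25% or more of the tables can seat 4 or more people?",
    "Is there at least one table that seats 8 people, or is it clear that "
    "two 4-person tables can easily be combined?",
    "Has someone mentioned taking their group of friends to the restaurant "
    "and having a good time?",
    "Has someone mentioned having a small event there, such as a birthday party?",
]


def build_prompt(questions: list[str]) -> str:
    """Assemble the rubric prompt, asking for one '<n>: yes|no' line per question."""
    header = (
        "You are assigned the task of evaluating the group friendliness of "
        "this restaurant based on the pictures and reviews given.\n"
        "Answer each question on its own line as '<number>: yes' or '<number>: no'."
    )
    numbered = [f"{i}. {q}" for i, q in enumerate(questions, start=1)]
    return "\n".join([header, *numbered])


ANSWER_LINE = re.compile(r"^\s*(\d+)\s*:\s*(yes|no)\b", re.IGNORECASE | re.MULTILINE)


def score_response(response: str, num_questions: int) -> int:
    """Return the number of 'yes' answers (0..num_questions).

    Raises ValueError if any question is unanswered, so a malformed
    reply is caught instead of silently scoring low.
    """
    answers: dict[int, bool] = {}
    for match in ANSWER_LINE.finditer(response):
        answers[int(match.group(1))] = match.group(2).lower() == "yes"
    missing = [n for n in range(1, num_questions + 1) if n not in answers]
    if missing:
        raise ValueError(f"unanswered questions: {missing}")
    return sum(answers[n] for n in range(1, num_questions + 1))


if __name__ == "__main__":
    print(build_prompt(QUESTIONS))
    reply = "1: yes\n2: yes\n3: no\n4: yes\n5: no"
    print(score_response(reply, len(QUESTIONS)))  # 3
```

Asking for a fixed, line-per-answer format makes the reply easy to validate, and rejecting incomplete replies keeps parsing failures from masquerading as low scores.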

Next Steps as a Prompt Engineer

The example above shows the beginning stages of creating a prompt. In practice, the next step would be to calibrate the prompt by trying it on at least 5 to 10 examples and checking whether the output matches what you expect. Then consider the different ways the problem could be phrased, to reduce the chance of the LLM misinterpreting it. Before deploying at scale, you’ll need to understand the cases where the prompt fails and whether that error rate is acceptable for your product, in addition to the cost and latency of running this analysis at scale.
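The calibration step can be as simple as hand-labeling a handful of restaurants with the score you expect and comparing the prompt's output against those labels. In this sketch, `calibrate`, the `tolerance` parameter, and the example data are hypothetical; `score_fn` stands in for whatever runs the prompt and returns a 0 to 5 score.

```python
from typing import Callable


def calibrate(
    expected: dict[str, int],
    score_fn: Callable[[str], int],
    tolerance: int = 0,
) -> tuple[float, list[str]]:
    """Compare prompt scores with hand-labeled scores.

    Returns the error rate and the ids of examples where the prompt's
    score differs from the expected score by more than `tolerance`.
    """
    if not expected:
        raise ValueError("need at least one labeled example")
    failures = [
        example_id
        for example_id, want in expected.items()
        if abs(score_fn(example_id) - want) > tolerance
    ]
    return len(failures) / len(expected), failures


if __name__ == "__main__":
    # Made-up hand labels (0-5) for five restaurants.
    labels = {"r1": 5, "r2": 1, "r3": 3, "r4": 0, "r5": 4}
    # Stand-in for running the prompt; a real version would call an LLM.
    model_scores = {"r1": 5, "r2": 2, "r3": 3, "r4": 0, "r5": 4}

    def fake_score(example_id: str) -> int:
        return model_scores[example_id]

    print(calibrate(labels, fake_score))               # (0.2, ['r2'])
    print(calibrate(labels, fake_score, tolerance=1))  # (0.0, [])
```

Returning the failing ids, not just the rate, is what lets you study the cases where the prompt fails and decide whether that error rate is acceptable.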

For more advanced cases, a prompt engineer will start to develop a sense of the strengths of each LLM. We personally found Google's Bison to be great at reasoning, Claude at writing, and GPT-3.5 at general-purpose tasks. Based on this, you can optimize the cost, quality, and scale of your product.
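One way to act on these observations is a small routing table that picks a model per task type. The `Task` enum, `MODEL_FOR_TASK`, and `pick_model` are hypothetical, and the model strings are placeholder labels reflecting the preferences described in this post, not exact API model identifiers.

```python
from enum import Enum


class Task(Enum):
    REASONING = "reasoning"
    WRITING = "writing"
    GENERAL = "general"


# Placeholder labels, not exact API model identifiers.
MODEL_FOR_TASK = {
    Task.REASONING: "google-bison",
    Task.WRITING: "claude",
    Task.GENERAL: "gpt-3.5",
}


def pick_model(task: Task) -> str:
    # Fall back to the general-purpose model for anything unmapped.
    return MODEL_FOR_TASK.get(task, MODEL_FOR_TASK[Task.GENERAL])


if __name__ == "__main__":
    print(pick_model(Task.WRITING))  # claude
```

Keeping the mapping in one place means that when a newer model proves cheaper or better for a task, only one line changes.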

Why this field is exploding

Google has had the above feature for many years, and at their scale they likely spent millions of dollars to create AI models that could accurately categorize restaurants according to these subjective criteria. One reason for the popularity of LLMs is that, for the first time, you can parse complex natural language into specific attributes you can program against, within hours or days. Essentially, LLMs have democratized the use of AI by bringing cost and time to market down to a point where startups can rapidly create features and products and iterate on the customer experience.

Conclusions

While building NetworkGPT, I needed a deep understanding of the type of data I was feeding my models and of the acceptable risk of error for my users. It wasn’t a task I could easily hire someone to solve for me. I suspect that in regulated and specialized industries, where errors have a high cost, prompt engineers will need in-depth expertise in that field. Most companies will need to find individuals at the intersection of domain expertise, computer science, and an understanding of how to “talk to the LLMs”. Prompt engineering is not a passing trend. Yes, there is hype. However, the need for dedicated professionals who understand the nuances and the “engineering” involved in this skill is real.

I would love to hear your feedback on this post and how you define prompt engineering. More articles about prompt engineering are coming soon.